As AI accelerates data center growth nationwide, we analyze the regional cost and infrastructure trade-offs of powering these facilities with clean, reliable energy across eight U.S. locations. Image courtesy of NREL.

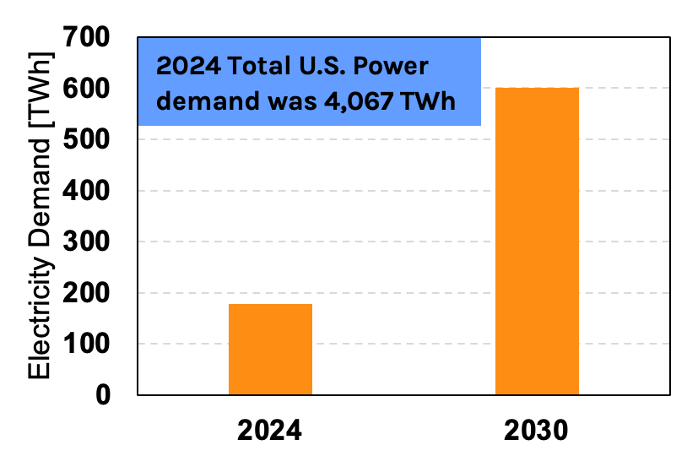

As the AI revolution accelerates, data centers have become the digital heart of our economy. But this digital expansion comes with a significant energy cost and, with it, a carbon cost. In 2024, U.S. data centers consumed approximately 178 TWh of electricity, a figure projected to soar past 600 TWh by 2030. By then, data centers could account for a staggering 12% of total U.S. electricity demand. In parallel, data center power capacity is expected to double, rising from around 17 GW in 2022 to 35 GW by the end of the decade. A key driver behind this growth is the increasing deployment of AI-optimized data centers, which can consume three to five times more power per rack than traditional setups.

Figure 1: Data center electricity demand in the U.S. in 2024 and projected demand in 2030.
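To put the projection in perspective, the short sketch below derives the growth rate implied by the two demand figures quoted above. It assumes smooth compound growth between 2024 and 2030 and treats 600 TWh as the 2030 value, although the article only says demand will exceed it. The variable names are illustrative and are not part of Sesame's tooling.

```python
# Figures quoted in the article.
demand_2024_twh = 178.0
demand_2030_twh = 600.0   # "past 600 TWh", used here as a floor
share_2030 = 0.12         # projected share of total U.S. demand
years = 2030 - 2024

# Assumption: demand grows at a constant compound rate over the period.
cagr = (demand_2030_twh / demand_2024_twh) ** (1 / years) - 1

# Total U.S. demand implied if 600 TWh corresponds to a 12% share.
implied_total_2030_twh = demand_2030_twh / share_2030

print(f"Implied annual growth rate: {cagr:.1%}")
print(f"Implied total U.S. demand in 2030: {implied_total_2030_twh:,.0f} TWh")
```

Under these assumptions, demand would need to grow by roughly 22% per year, and the 12% share corresponds to about 5,000 TWh of total U.S. demand in 2030.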

This explosive growth raises a critical question:

How do we power this surge sustainably and reliably across vastly different regional energy landscapes?

The Clean Power Challenge: One Size Does Not Fit All

Today’s AI and cloud data centers are heavily concentrated in a handful of established hubs—such as Northern Virginia, Dallas-Fort Worth, Phoenix, and Silicon Valley—where robust grid infrastructure, fiber connectivity, and proximity to hyperscale users have historically driven clustering. However, with the exponential rise in power demand and increasingly constrained local grids, this concentration is becoming unsustainable. Looking ahead, data center growth is expected to disperse across the country, expanding into regions like the Midwest, Southeast, and Pacific Northwest, where land, renewable energy potential, and permitting conditions offer more favorable development opportunities. This geographic diversification will require a regionally tailored power strategy—one that aligns siting decisions with local grid capacities, renewable resource availability, and decarbonization goals. A one-size-fits-all approach is no longer viable; enabling the next generation of AI infrastructure will depend on optimizing energy planning at the regional level to ensure resilience, cost-efficiency, and climate alignment.

Figure 2: Data center infrastructure in the United States in 2025. Courtesy of NREL.

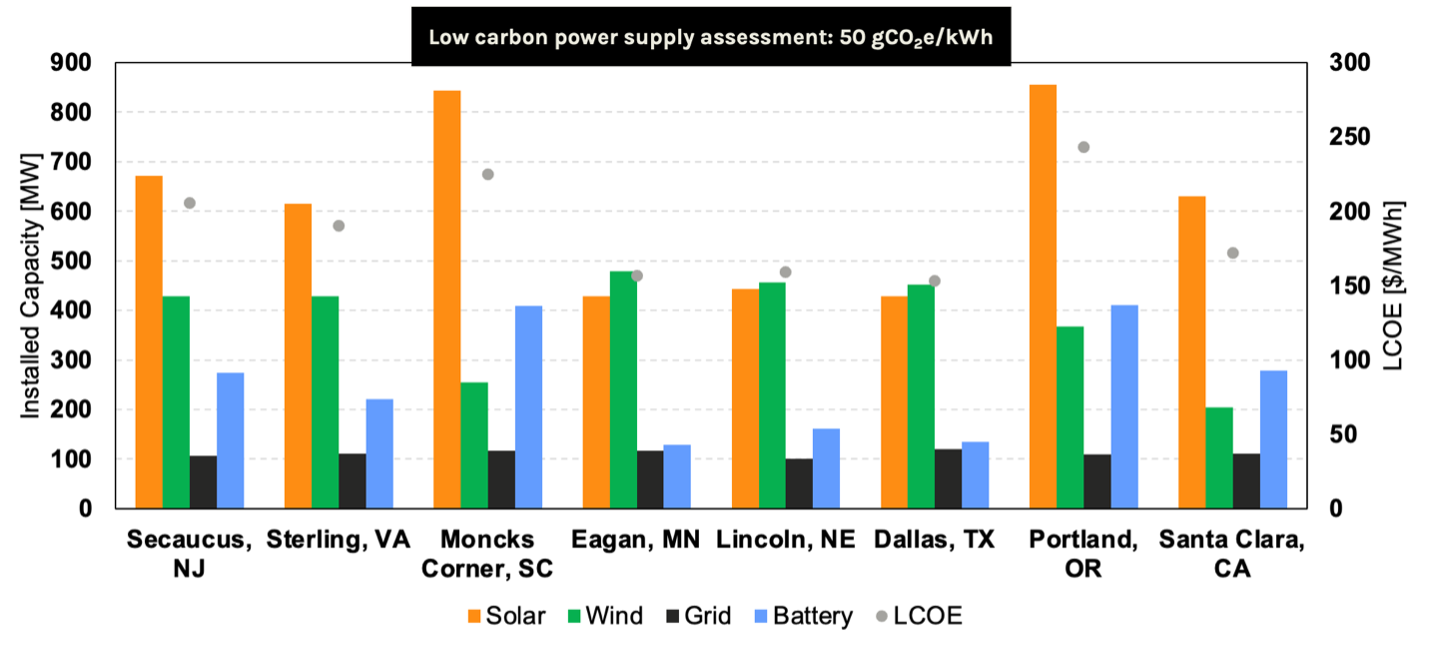

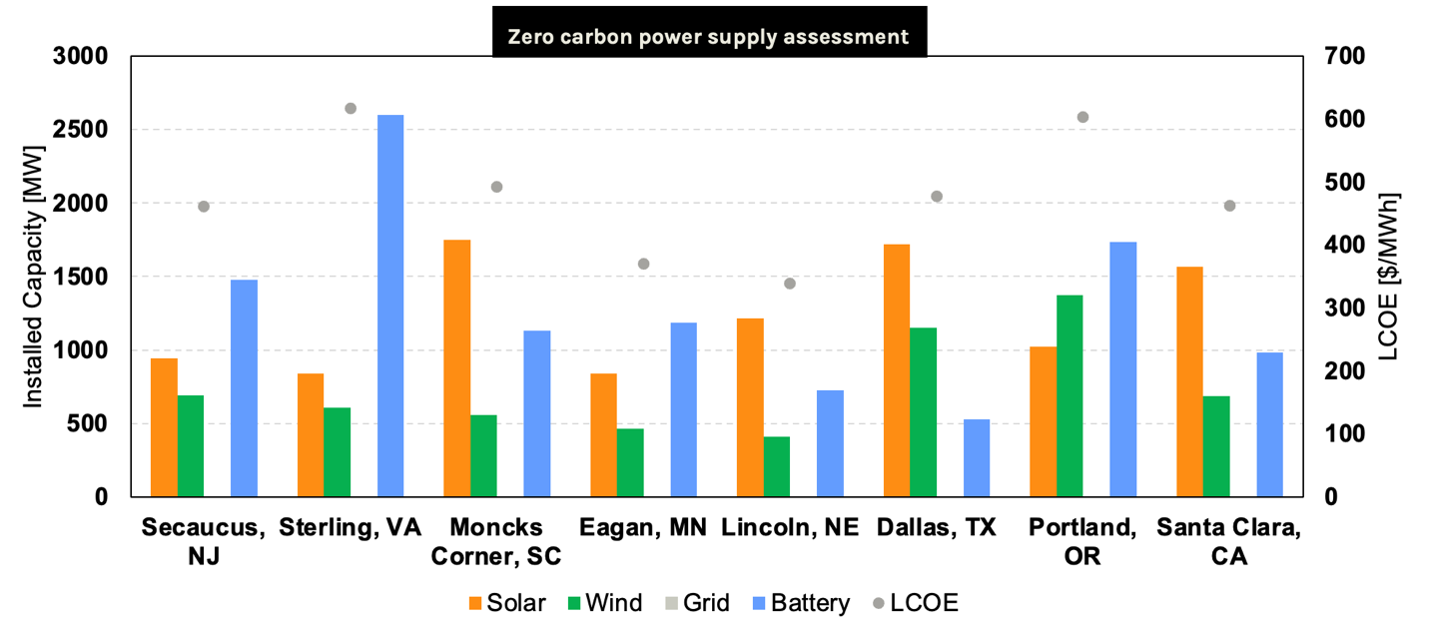

To evaluate how regional differences impact the feasibility and emissions intensity of powering AI data centers, we conducted a comparative case study using Sesame’s data center power system modeling tool. The analysis focused on eight U.S. regions and explored two decarbonization targets: 50 gCO2/kWh and 0 gCO2/kWh. Each scenario was modeled using a limited but realistic set of technologies—grid power, solar, wind, and battery storage—to reflect common constraints in siting and procurement. The results reveal stark contrasts across regions in both cost and system composition, highlighting the critical importance of region-specific power strategies as data center expansion accelerates. This comparative view provides insight into where clean power integration is already viable—and where targeted infrastructure or policy support will be needed to meet rising AI loads sustainably.

Texas and the Midwest (including states like Minnesota and Nebraska) emerge as the most cost-effective regions for powering low-carbon data centers, with Levelized Cost of Electricity (LCOE) estimates ranging from $153 to $159 per MWh. These regions benefit from abundant renewable resources whose output is relatively steady, making them more attractive for large-scale clean energy integration. However, even in these favorable zones, low-carbon solutions remain 2–3 times more expensive than current grid power, highlighting the steep economic gap that still exists for clean energy deployment at the scale demanded by AI infrastructure.

Figure 3: Optimized behind-the-meter asset capacities and levelized cost in the low-carbon power supply assessment.
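The cost gap can be made concrete with a quick back-calculation. The sketch below infers the grid power cost implied by the quoted LCOE range and the "2–3 times" multiple. It assumes the multiple means low-carbon cost equals two to three times grid cost; the resulting grid figures are derived here and are not stated in the analysis.

```python
# Figures quoted in the article.
lcoe_low, lcoe_high = 153.0, 159.0   # $/MWh, low-carbon LCOE in Texas and the Midwest
mult_low, mult_high = 2.0, 3.0       # "2-3 times more expensive" than grid power

# Assumption: low-carbon LCOE = multiple * grid cost, so grid cost = LCOE / multiple.
# The lowest implied grid cost pairs the cheapest LCOE with the largest multiple.
grid_min = lcoe_low / mult_high
grid_max = lcoe_high / mult_low

print(f"Implied grid power cost: ${grid_min:.1f} to ${grid_max:.1f} per MWh")
print(f"Implied green premium: ${lcoe_low - grid_max:.1f} to ${lcoe_high - grid_min:.1f} per MWh")
```

Read this way, the quoted figures imply grid power at roughly $51 to $80 per MWh, which gives a sense of the premium a low-carbon supply would carry per unit of energy.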

To illustrate the practical implications, we modeled a 150 MW data center using hourly demand curves under two decarbonization scenarios. In the low-carbon scenario (~50 gCO2e/kWh), sites require up to 850 MW of solar capacity and 400 MWh of battery storage, alongside 100–120 MW of grid backup. While effective in reducing emissions, the high LCOE presents a major barrier. In the zero-carbon scenario, infrastructure needs increase dramatically: solar capacity must grow by 50–100%, battery storage by 5–10×, and costs rise 2–3× above the low-carbon case. Without breakthroughs in long-duration storage or clean firm power sources—such as small modular reactors (SMRs) or geothermal energy—fully decarbonized data center operations remain out of economic reach. These findings emphasize four core takeaways: the scale of AI-driven demand, the critical need for regional energy strategies, the magnitude of infrastructure investment required, and the central role of energy storage as both a technical and economic bottleneck.

Figure 4: Optimized behind-the-meter asset capacities and levelized cost in the zero carbon power supply assessment.
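The jump from the low-carbon to the zero-carbon scenario is easier to grasp in absolute terms. The sketch below applies the quoted multipliers to the low-carbon sizing of the 150 MW case. It takes the "up to" values as upper bounds and expresses storage as hours of full load, a simplification that ignores round-trip losses, inverter power limits, and the hourly demand curves used in the actual model. The helper `hours_at_full_load` is hypothetical and only illustrates the arithmetic.

```python
# Figures quoted in the article for the 150 MW case study.
load_mw = 150.0
solar_low_carbon_mw = 850.0       # "up to 850 MW", treated as an upper bound
battery_low_carbon_mwh = 400.0    # "up to ... 400 MWh"

# Zero-carbon multipliers quoted in the article.
solar_growth = (1.5, 2.0)         # solar "must grow by 50-100%"
battery_growth = (5.0, 10.0)      # battery storage grows "5-10x"

solar_zero_mw = tuple(solar_low_carbon_mw * g for g in solar_growth)
battery_zero_mwh = tuple(battery_low_carbon_mwh * g for g in battery_growth)


def hours_at_full_load(energy_mwh: float, load: float) -> float:
    """Hours a battery could carry the full load (ignores losses and power limits)."""
    return energy_mwh / load


print(f"Low-carbon solar-to-load ratio: {solar_low_carbon_mw / load_mw:.1f}x")
print(f"Zero-carbon solar: {solar_zero_mw[0]:,.0f} to {solar_zero_mw[1]:,.0f} MW")
print(f"Zero-carbon battery: {battery_zero_mwh[0]:,.0f} to {battery_zero_mwh[1]:,.0f} MWh")
print(f"Low-carbon storage: {hours_at_full_load(battery_low_carbon_mwh, load_mw):.1f} h of full load")
for mwh in battery_zero_mwh:
    print(f"Zero-carbon storage: {hours_at_full_load(mwh, load_mw):.1f} h of full load")
```

Under these assumptions, storage moves from under three hours of full-load coverage to somewhere between about 13 and 27 hours, which is consistent with the article's point that storage becomes the dominant bottleneck.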

As we plan for the electrification of transportation, industry, and buildings, it’s critical to remember that all of these sectors draw from the same underlying energy system. The grid capacity, renewable generation potential, and storage technologies needed to decarbonize AI data centers are also essential for scaling EV charging, industrial heat, and residential and commercial power demand. While data centers may have the financial means to pay a green premium, their rapid and concentrated load growth could strain local and regional energy systems, potentially crowding out other decarbonization priorities. This is no longer a speculative concern—these trade-offs are already beginning to emerge in grid planning and interconnection queues across the U.S., and must be addressed proactively to ensure an equitable and balanced energy transition.

Finally, it’s worth acknowledging that the responsibility for sustainable digital infrastructure doesn’t lie solely with developers or utilities; it also extends to end users. For this article, we created an AI-generated image purely for demonstration. At Sesame Sustainability, we believe that small actions at scale create transformative impact, so we calculated the footprint of this seemingly minor step: creating just six AI images consumed approximately 0.024 kWh of electricity, resulting in ~24 grams of CO2 emissions. On its own, that may seem negligible. But scaled globally, across millions of daily image generations, chatbot queries, and model inferences, these emissions accumulate rapidly. It raises an important question: did the added value justify the energy use? As AI becomes increasingly embedded in our lives, these micro-decisions will shape the carbon profile of the digital age.
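The footprint figures above can be broken down per image and scaled up with a few lines of arithmetic. In the sketch below, the energy and emissions values come from the article, while the daily image count is a purely hypothetical round number chosen for illustration. The function `daily_footprint` is likewise an illustrative helper, not part of any Sesame tool.

```python
# Figures quoted in the article.
images = 6
energy_kwh = 0.024
emissions_g = 24.0

energy_per_image_kwh = energy_kwh / images
emissions_per_image_g = emissions_g / images

# Emissions factor implied by the two quoted figures (gCO2 per kWh).
implied_intensity_g_per_kwh = emissions_g / energy_kwh


def daily_footprint(images_per_day: int) -> tuple[float, float]:
    """Return (kWh per day, tonnes CO2 per day) for a given image volume."""
    kwh = images_per_day * energy_per_image_kwh
    tonnes = images_per_day * emissions_per_image_g / 1e6  # grams to tonnes
    return kwh, tonnes


# Hypothetical volume, for illustration only.
kwh_per_day, tonnes_per_day = daily_footprint(1_000_000)

print(f"Per image: {energy_per_image_kwh * 1000:.1f} Wh, {emissions_per_image_g:.1f} g CO2")
print(f"Implied emissions factor: {implied_intensity_g_per_kwh:,.0f} gCO2/kWh")
print(f"1,000,000 images per day: {kwh_per_day:,.0f} kWh, {tonnes_per_day:.1f} t CO2")
```

The quoted figures work out to about 4 Wh and 4 g of CO2 per image, which implies an emissions factor of roughly 1,000 gCO2/kWh. At the hypothetical volume of one million images a day, that would be about 4,000 kWh and 4 tonnes of CO2 daily.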

Key Takeaways

This analysis points to five conclusions about powering AI data centers sustainably:

• Regional energy strategies are critical—Texas and the Midwest offer the most cost-effective pathways for low-carbon data center operations.

• Even in favorable regions, clean energy solutions remain 2–3 times more expensive than current grid power, creating significant economic barriers.

• Zero-carbon scenarios require massive infrastructure investments, including 5–10× more battery storage capacity than low-carbon alternatives.

• Energy storage emerges as both a technical enabler and economic bottleneck for data center decarbonization.

• The competition for clean energy resources between data centers and other sectors requires proactive planning to ensure an equitable energy transition.

At Sesame Sustainability, our mission is to equip stakeholders across the energy and digital ecosystems with the tools to make smarter, faster, and more transparent decisions. Our modeling platform integrates high-resolution techno-economic analysis with region-specific energy data, enabling hyperscalers, utilities, and local authorities to evaluate trade-offs, assess decarbonization pathways, and optimize infrastructure investments. Whether it’s identifying the most viable regions for AI data center expansion, forecasting emissions and reliability outcomes under different energy mixes, or supporting grid planning and permitting strategies, Sesame provides the clarity needed to act decisively in a rapidly evolving landscape. As the stakes of the energy transition rise, precision, transparency, and collaboration will be key—and Sesame is here to help lead the way.

All results above were generated with Sesame’s software platform. To learn how Sesame Sustainability can support your emissions and cost modeling, contact us today or visit Sesame Sustainability.